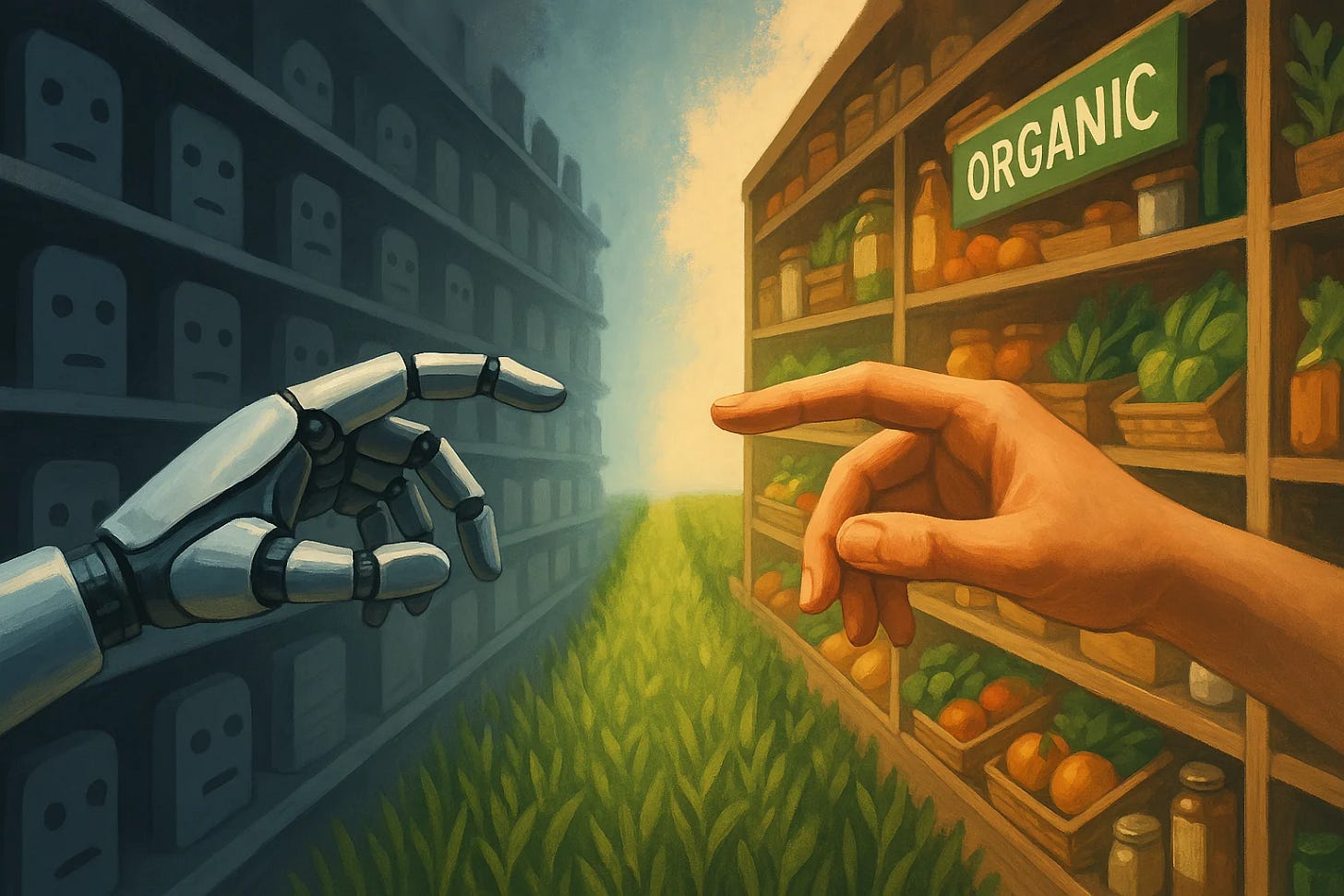

Is AI Having Its Whole Foods Moment?

The Emergence of Ethical AI in Creative Technology

Links to the longer conversation on YouTube, Spotify, and Apple. Follow us on Instagram.

In the rapidly evolving world of artificial intelligence, a fascinating market dynamic is emerging that bears a striking resemblance to what happened in the grocery industry decades ago. Just as Whole Foods created a premium category based on ethical sourcing and organic ingredients, we’re now witnessing the rise of AI companies explicitly positioning themselves as ethical alternatives in the creative technology space. But there’s a twist. In grocery, organic was the disruptive newcomer; in AI, it’s an “inverted Whole Foods situation,” in which established studios hold the organic position and AI is the controversial technology claiming territory.

Companies like Asteria, with their recently released Moonvalley Marey model, have emerged with significant financial backing (reportedly around $70 million), promoting themselves as the ethical choice in AI content generation. Industry figures like Paul Trillo and Don Allen Stevenson III have aligned themselves with these efforts, lending credibility to the ethical positioning. Similarly, Adobe Firefly, Shutterstock, and Getty have staked out clear positions on licensing and ethical training data.

These companies aren’t just making moral arguments—they’re creating a market category. The message is clear: creators who care about ethics should choose these platforms, even if it means paying a premium or accepting potential limitations.

Ethics as Business Strategy

While the moral positioning is prominent, there’s an undeniable business calculation at work. If lawsuits, regulations, or cultural backlash eventually restrict models trained on unauthorized data, companies promoting “clean” approaches will be perfectly positioned to capture the market.

This dual motivation mirrors how Whole Foods created both a moral high ground and a premium business category simultaneously. The strategy is clever: position yourself as the ethical alternative before regulations potentially force everyone in that direction.

What makes this particularly interesting is that while Whole Foods disrupted established grocery chains, these AI companies are positioning themselves as protectors of creative industry values against disruption—while simultaneously being part of that very disruption.

The Quality vs. Ethics Dilemma

A central tension in this emerging landscape is whether ethically trained models can match the quality of those trained on larger, less discriminating datasets. In theory, models with access to more training data, regardless of licensing or permission, may produce superior results. This creates a potential market bifurcation: larger studios, which carry more legal and reputational exposure, might use ethical models, while smaller creators gravitate toward whatever produces the best output.

This tension raises a key question: will creators choose ethics over quality when their livelihoods depend on competitive output? Many leading creators simply use whatever tools best express their creativity, doing whatever it takes to achieve their vision.

Yet examples of high-quality work created with ethical models demonstrate that the quality gap may not be insurmountable. Projects from ethical AI proponents serve as proof points that impressive results are possible within ethical constraints.

Will Consumers Care How Their Content Is Made?

Perhaps the most critical question is whether consumers will ultimately care how their entertainment is produced. History suggests we might vote with our wallets in ways that don’t always reflect our stated ethical concerns.

A revealing parallel exists with manufacturing: despite widespread concerns about labor practices or geopolitical tensions with certain countries, most consumers haven’t significantly changed their purchasing behavior for electronics and other technology products.

This pattern echoes how organic food remained a niche category for decades before broader adoption. The difference is that with creative content, the production process is even less visible to consumers than food ingredients. Unless explicitly labeled or marketed as “ethically produced AI,” will viewers even notice or care about how their entertainment was created?

The “No AI” Badge of Honor

Interestingly, we’re witnessing the emergence of what amounts to an “organic certification” for traditional media production—the proud declaration that no AI was used. James Cameron has declared that the upcoming Avatar sequel used no AI. Conan O’Brien referenced it at the Oscars. Some film festivals explicitly exclude AI-generated content.

This mirrors organic certification in food, creating a binary distinction that producers can use to differentiate themselves. The question is whether this distinction becomes a permanent feature of the industry or merely a transitional reaction to new technology.

The irony is that, unlike food labeling, which promotes what’s included (organic ingredients), the “clean” designation in AI is primarily about what’s excluded (unauthorized training data). It’s a defensive position rather than an affirmative one.

Beyond Technical Perfection: The Uncanny Valley Problem

Beyond the ethical/unethical binary lies another dimension: the persistent challenge of the uncanny valley in AI-generated content. While AI systems can produce technically impressive visuals, they often struggle to cross the final threshold of human-like authenticity that resonates emotionally with audiences.

This introduces a factor that transcends both ethics and technical quality—the human connection that comes from authentic expression. Currently, AI systems have no parameter for authentic emotional resonance. The technology may execute technical aspects flawlessly while still leaving viewers in the uncanny valley where something feels subtly but importantly off.

This raises a profound question: does authentic connection matter more than ethical sourcing? If consumers ultimately value emotional resonance over technical perfection or ethical considerations, the market may develop in unexpected ways that favor neither “clean” nor “dirty” models, but those that best bridge the uncanny valley.

Economic Realities and Structural Challenges

Hard economic realities may ultimately determine market outcomes regardless of ethical positioning. The visual effects industry offers a cautionary tale, having been fundamentally transformed by offshoring to operations in countries where labor costs are significantly lower.

This comparison suggests that, regardless of ethical positioning, market forces may push creative work toward lower-cost options. The pattern has held before: economic factors often outweigh ethical considerations in market decisions.

This raises questions about the sustainability of premium-positioned ethical models in a global marketplace. Will they face the same pressures that transformed other creative industries? Currently, most AI creators make money through client work rather than content creation, indicating the ecosystem is still nascent and unstable.

What Happens When the Dust Settles?

A fascinating question emerges about market consolidation: what happens if companies with less stringent data practices acquire ethically positioned models? How would the artists and developers who joined these companies specifically for their ethical stance respond to such consolidation?

The resolution of pending lawsuits will also shape the landscape. Rather than shutting down entire categories of products, these outcomes might establish frameworks for licensing and compensation going forward, creating a structure within which all companies must operate.

The Future of Ethical AI: Beyond the Supermarket Analogy

The Whole Foods comparison offers both insight and limitations. Like organic food, ethical AI represents both a genuine moral position and a strategic market differentiation. Just as Whole Foods created a premium category that eventually influenced mainstream grocery chains, ethical AI may establish practices that become industry standards.

However, the inverted nature of the AI disruption creates different dynamics. Rather than a scrappy newcomer challenging established players on ethical grounds, we have well-funded AI startups attempting to position themselves as the responsible choice while simultaneously disrupting established creative industries.

What remains to be seen is whether consumers, creators, and distributors will prioritize ethical considerations or if market forces will ultimately favor whatever produces the most compelling content at the best price. Will ethically trained models remain a premium alternative, become the industry standard through regulation, or eventually be absorbed by market consolidation?

Whether this becomes AI’s true “Whole Foods moment” depends on how the industry navigates the tensions between ethics, quality, authenticity, and economics in the coming years. For now, we’re witnessing the early days of a fascinating experiment in whether ethical positioning can create sustainable value in a rapidly evolving technological landscape.

(Written with Claude. All images generated with Sora.)

Great post, as we desperately need more conversation in between "ban all AI art" and "lol at salty artists". It's a nuanced topic that deserves nuance.

I'm incredibly skeptical that any "ethical" AI attempts will succeed. Market forces win 99% of the time, as you suggest may happen. Though I don't think the Whole Foods analogy is completely apt, because Whole Foods is selling ethics _and_ health. People don't just want organic because it's more ethical but because they think it's better food. Consumers are ultimately largely self-centered and want the best thing.

So if they want the best thing, is that human art or AI art? I'd say almost certainly AI art, if you count a talented human artist who includes AI in their toolchain as AI art, because having additional tools at your disposal is unlikely to make you worse (if used appropriately). So people will want AI art.

I also find the conversation on this topic very clouded by the culture wars and incentives. Certainly VCs and AI startup execs have incentive to over-hype the positives and downplay the negatives. But there's also a dynamic where social media has become polarized so people feel forced to choose a "side". So when someone is upset about AI art, it's unclear if they're an artist genuinely concerned about plagiarism and economic impact, or just a terminally online complainer who wants to criticize anything associated with Silicon Valley. I feel a lot of pushback to AI art is effectively a social media fad - not all of it, but a lot of it.

We're probably zoned in on artists right now, and to a lesser extent coders, because that's what the models are generating right now, but I have an odd suspicion that in the medium term, artists and engineers might be the _safest_ from AI, precisely because the AI is a remix machine and needs something to remix. My first pass at AI art seemed amazing, but I quickly ran into the limitations and realized you need to give it reference images and train LoRAs. And so I ask: who will make the reference images?

Whether in art or engineering, I see AI needing some sort of human "spark", and there may still be demand for that human spark. I see artists as being much safer than many office jobs that involve more rote work, precisely because they can provide that spark.

But it's so difficult to predict how things will play out. The only thing I feel strongly about is that we need more viewpoints and options between "let's just do what tech execs want with no pushback" and "ban it all, we hate new technology". Hopefully there is a happier middle path.

I think clean pushes the mainstream, and cutting edge pushes the new industry.

Absolutely loved listening to this.

You guys get it 🙏